Ruoyan

July 18, 2022, 2:58pm


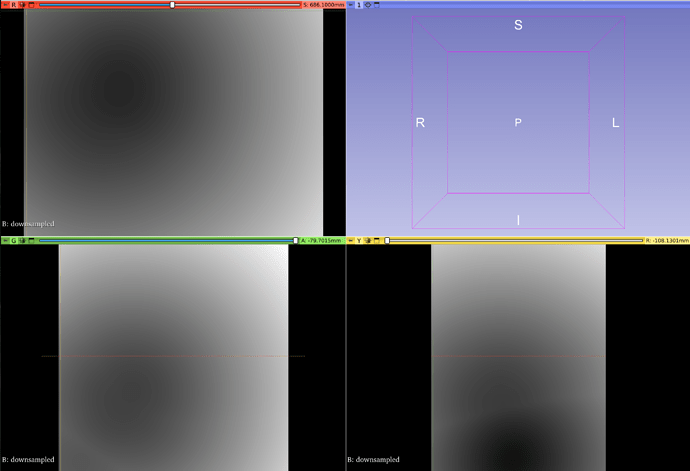

Hi, I want to downsample an image to 50*50*50 and then upsample it back to the original size. After processing it with two ResampleImageFilters, the upsampled image has 2*z_size*y_size black/empty pixels at the end of the x-direction.

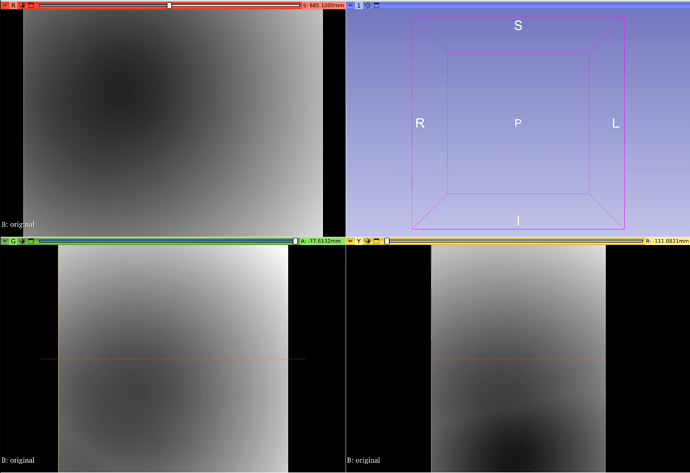

Downsampled look:

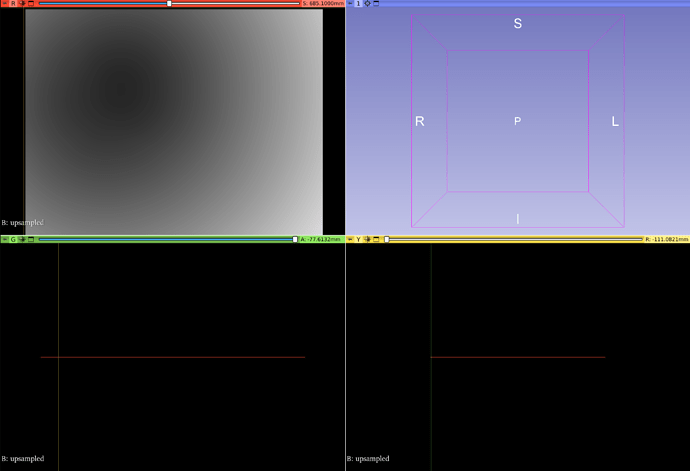

Upsampled look:

Any idea what could lead to this problem? Thanks a lot!

dzenanz

July 18, 2022, 3:07pm


You might be assuming that pixel coordinates refer to the corner of the pixel. They refer to the center of the pixel, so you might need to adjust your math to account for this.

Ruoyan

July 18, 2022, 3:36pm


Thanks for the quick reply. In my case, the origin of all 3 images is the same. If I understand correctly, the upsampled image should have the same size and physical coordinates as the original image. Am I correct?

dzenanz

July 18, 2022, 3:49pm


What is the original resolution? Can you share your code?

Ruoyan

July 18, 2022, 5:28pm


Hi, here is the code:

```cpp
// Excerpt from a templated function: ImageType, ResampleImageFilterType,
// T_Transform, InterpolatorType and BSplineInterpolatorType are defined elsewhere.

// GET ORIGINAL IMAGE INFO
auto inputImage = imageAReader->GetOutput();
auto outputOrigin = inputImage->GetOrigin();
auto outputDirection = inputImage->GetDirection();
auto inputSizePixels = inputImage->GetLargestPossibleRegion().GetSize(); // [279,212,89]
auto inputSpacing = inputImage->GetSpacing();
// Physical extent per axis (spacing * number of pixels)
double inputSize[3] = {inputSpacing[0] * inputSizePixels[0],
                       inputSpacing[1] * inputSizePixels[1],
                       inputSpacing[2] * inputSizePixels[2]};

auto _pTransform = T_Transform::New();
_pTransform->SetIdentity();

typename InterpolatorType::Pointer interpolator = BSplineInterpolatorType::New();

// DOWNSAMPLING
auto imageResampleFilterD = ResampleImageFilterType::New();
typename ImageType::SizeType outputSizeD = {static_cast<SizeValueType>(50),
                                            static_cast<SizeValueType>(50),
                                            static_cast<SizeValueType>(50)};
typename ImageType::SpacingType outputSpacingD;
outputSpacingD[0] = inputSize[0] / static_cast<float>(50);
outputSpacingD[1] = inputSize[1] / static_cast<float>(50);
outputSpacingD[2] = inputSize[2] / static_cast<float>(50);

imageResampleFilterD->SetInput(imageAReader->GetOutput());
imageResampleFilterD->SetOutputOrigin(outputOrigin);
imageResampleFilterD->SetOutputSpacing(outputSpacingD);
imageResampleFilterD->SetOutputDirection(outputDirection);
imageResampleFilterD->SetInterpolator(interpolator);
imageResampleFilterD->SetSize(outputSizeD);
imageResampleFilterD->SetTransform(_pTransform);
imageResampleFilterD->Update();

// UPSAMPLING
auto imageResampleFilterU = ResampleImageFilterType::New();
imageResampleFilterU->SetInput(imageResampleFilterD->GetOutput());
imageResampleFilterU->SetOutputOrigin(outputOrigin);
imageResampleFilterU->SetOutputSpacing(inputSpacing);
imageResampleFilterU->SetOutputDirection(outputDirection);
imageResampleFilterU->SetInterpolator(interpolator);
imageResampleFilterU->SetSize(inputSizePixels);
imageResampleFilterU->SetTransform(_pTransform);
imageResampleFilterU->Update();
```

dzenanz

July 18, 2022, 6:57pm


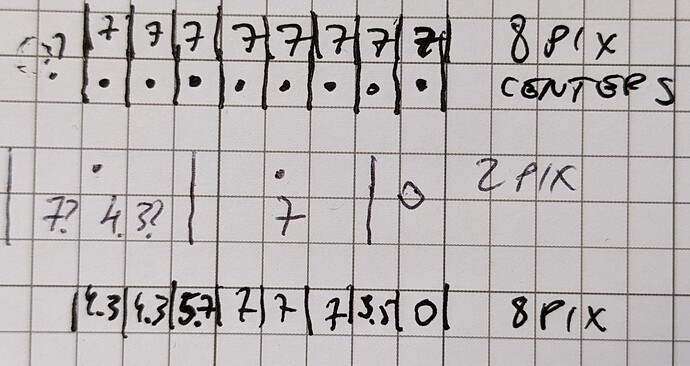

Consider a 1D case of 8 pixels:

The first row is the original image, filled with 7s.

The second row is downsampled to 2 pixels.

The third row is the expansion back to 8 pixels.

You need to adjust the origin of the downsampled image so that it keeps the same physical extent as the original. The mismatch becomes obvious when you resample back onto your original image grid.

Ruoyan

July 18, 2022, 9:33pm


Thanks a lot! This explains why we lost some image data in our case. I’ll try adjusting the origin for each resampling step. Thanks again for your help🙌