Hello zivy,

Thank you for the clear answer. May I ask four more questions about this topic?

I am learning from the SimpleITK B-spline registration tutorial, and I use the following code in my experiment:

    registration_method.SetInitialTransformAsBSpline(initial_transform,
                                                     inPlace=False,
                                                     scaleFactors=[1,2,4])
    registration_method.SetMetricAsMeanSquares()
    registration_method.SetMetricSamplingStrategy(registration_method.RANDOM)
    registration_method.SetMetricSamplingPercentage(0.01)
    registration_method.SetShrinkFactorsPerLevel(shrinkFactors = [4,2,1])
    registration_method.SetSmoothingSigmasPerLevel(smoothingSigmas=[2,1,0])
    registration_method.SmoothingSigmasAreSpecifiedInPhysicalUnitsOn()
    registration_method.SetInterpolator(sitk.sitkLinear)
    registration_method.SetOptimizerAsGradientDescent(learningRate=0.001, numberOfIterations=100,
                                                      convergenceMinimumValue=1e-8, convergenceWindowSize=10)
    registration_method.SetOptimizerScalesFromPhysicalShift()

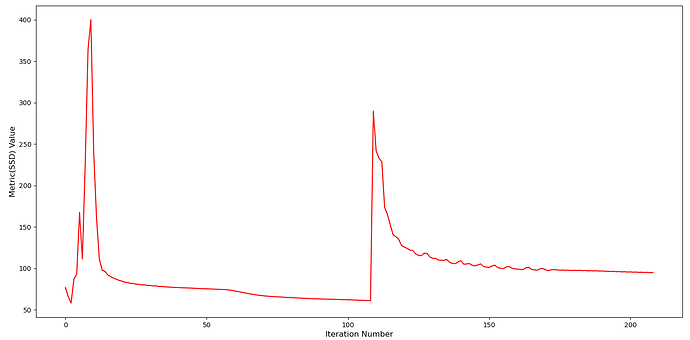

Q1: I am using multi-resolution/multi-scale registration with 3 levels: the B-spline grid scaleFactors=[1,2,4] are paired with the image shrinkFactors=[4,2,1] respectively, i.e. one grid scale per image resolution. However, the metric value plot in my last post suggests that the registration was performed on only 2 levels. Why is that?
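
To make the pairing I have in mind explicit, here is a small plain-Python sketch (no SimpleITK involved). It assumes that entry i of each list belongs to level i, which is my reading of the settings and may be wrong. `describe_levels` is a hypothetical helper, not a SimpleITK function.

```python
# The three per-level lists from my script.
bspline_scale_factors = [1, 2, 4]
shrink_factors = [4, 2, 1]
smoothing_sigmas = [2, 1, 0]


def describe_levels(scales, shrinks, sigmas):
    """Pair up the per-level settings (assumption: entry i belongs to level i)."""
    if not (len(scales) == len(shrinks) == len(sigmas)):
        raise ValueError("all per-level lists must have the same length")
    return [
        {"level": i, "bspline_scale": s, "shrink": k, "sigma": g}
        for i, (s, k, g) in enumerate(zip(scales, shrinks, sigmas))
    ]


levels = describe_levels(bspline_scale_factors, shrink_factors, smoothing_sigmas)
for level in levels:
    print(level)
```

With these lists I expect three levels, so I would also expect three distinct segments in the metric plot.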

Q2: I reran the registration experiment on the same fixed_image and moving_image using exactly the same script, and I got this:

The resulting SSD plot is quite different from the one in my last post. How can I get more reproducible results?

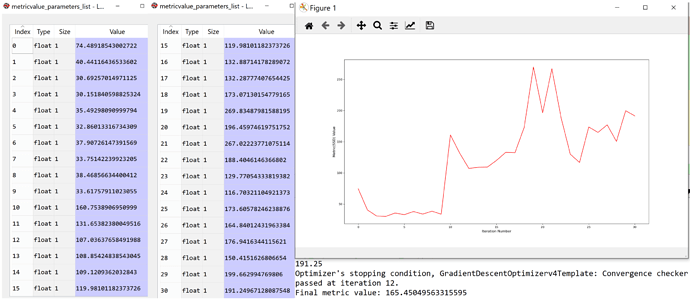

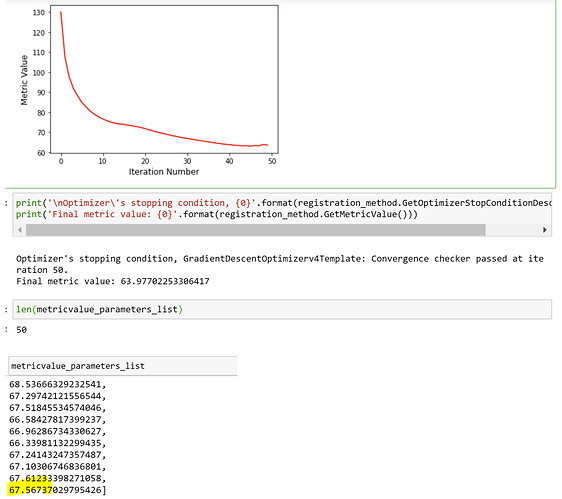
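
My guess is that the RANDOM sampling of 1% of the voxels is one source of the run-to-run difference. As far as I understand, `SetMetricSamplingPercentage` accepts an optional `seed` argument whose default is derived from the wall clock, so a fixed seed might help. The sketch below is plain Python, not SimpleITK; `sample_indices` is a hypothetical stand-in for the metric sampling, and the voxel count is made up for illustration.

```python
import random


def sample_indices(num_voxels, percentage, seed=None):
    """Pick a random subset of voxel indices (stand-in for metric sampling)."""
    rng = random.Random(seed)  # seed=None means a different stream each run
    count = max(1, round(num_voxels * percentage))
    return sorted(rng.sample(range(num_voxels), count))


num_voxels = 100_000  # illustrative size, not my actual image
run_a = sample_indices(num_voxels, 0.01, seed=42)
run_b = sample_indices(num_voxels, 0.01, seed=42)
run_c = sample_indices(num_voxels, 0.01)  # unseeded, like my current script

print(run_a == run_b)  # fixed seed: the same voxels are sampled in both runs
print(run_a == run_c)  # unseeded: the sampled voxels will almost surely differ
```

If the sampled voxels differ between runs, the metric values and the optimizer path would differ too, which would match what I see.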

Q3: I recorded the similarity metric value at every iteration the registration ran, and I only got 30 SSD values even though I set numberOfIterations=100. Is there early stopping here? What does “Convergence checker passed at iteration 12” mean?
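
My current understanding, which may be wrong, is that convergenceMinimumValue and convergenceWindowSize define a stopping test on the most recent metric values, so the optimizer can stop before numberOfIterations is reached. The sketch below is a simplified stand-in for such a test (the normalized slope of a straight-line fit over the last window), not ITK's actual computation. The function names are hypothetical and the metric values are synthetic.

```python
def window_convergence_value(values, window_size):
    """Simplified stand-in: normalized downward slope over the last window."""
    if len(values) < window_size:
        return None  # not enough history to judge convergence yet
    window = values[-window_size:]
    n = len(window)
    mean_x = (n - 1) / 2.0
    mean_y = sum(window) / n
    sxy = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(window))
    sxx = sum((i - mean_x) ** 2 for i in range(n))
    slope = sxy / sxx  # least-squares slope of the windowed values
    scale = max(abs(mean_y), 1e-12)
    return -slope / scale  # positive while the metric is still decreasing


def first_converged_iteration(values, window_size, min_value):
    """Return the zero-based iteration at which the test first passes."""
    for count in range(1, len(values) + 1):
        conv = window_convergence_value(values[:count], window_size)
        if conv is not None and conv <= min_value:
            return count - 1
    return None


# Synthetic metric curve that flattens out well before 100 iterations.
metric_values = [50.0 + 100.0 * 0.5 ** i for i in range(100)]
stop = first_converged_iteration(metric_values, window_size=10, min_value=1e-8)
print("test passes at iteration", stop)
```

If something like this runs separately at each resolution level, it would explain why I see far fewer than 100 values in total.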

Q4: It seems the registration stopped at iteration 30. Does iteration 30 correspond to the minimum similarity metric value for the lowest pyramid level? If so, shouldn't the final metric value be 191? Instead, the final metric value is reported as 165, which is closest to the 164.8 obtained at iteration 26. Does this mean that iteration 26 corresponds to the minimum value for the highest image resolution pyramid level? Will the final transformation be the registration result of iteration 26?

Sorry if my description is not clear enough. Many thanks.
