I have been using the Similarity3DTransform for quite some time now, but I keep finding configurations where it completely fails to register two images. With some manual pre-alignment, it usually works without problems.

I found that the registration falls into a local minimum, especially when the two images are rotated relative to each other.

I register binary masks, and here is a minimal working example which demonstrates the problem:

```python
import SimpleITK as sitk
import numpy as np

fixed_ar = np.zeros([100, 100, 100], dtype=np.uint8)
fixed_ar[20:40,20:80,20:80] = 1
fixed_ar[40:60,40:60,40:60] = 1
fixed = sitk.GetImageFromArray(fixed_ar)

moving_ar = np.rot90(fixed_ar, k=2)
moving = sitk.GetImageFromArray(moving_ar)

sitk.WriteImage(fixed, 'fixed.mhd')
sitk.WriteImage(moving, 'moving.mhd')

init = sitk.CenteredTransformInitializer(fixed, moving, sitk.Similarity3DTransform())

R = sitk.ImageRegistrationMethod()
R.SetMetricAsMeanSquares()
R.SetMetricSamplingStrategy(R.RANDOM)
R.SetMetricSamplingPercentage(0.2)
R.SetOptimizerAsGradientDescent(learningRate=1.0, numberOfIterations=1000, convergenceMinimumValue=1e-6, convergenceWindowSize=10)
R.SetOptimizerScalesFromPhysicalShift()
R.SetInterpolator(sitk.sitkLinear)
R.SetInitialTransform(init)
R.AddCommand(sitk.sitkIterationEvent, lambda: print(R.GetOptimizerIteration(), R.GetMetricValue(), R.GetOptimizerLearningRate()))

tx = R.Execute(sitk.SignedMaurerDistanceMap(fixed), sitk.SignedMaurerDistanceMap(moving))
tx = tx.Downcast()

print()
print("Scaling", tx.GetScale())
print("Translation", tx.GetTranslation())
print("Rotation", tx.GetVersor())

resample = sitk.ResampleImageFilter()
resample.SetReferenceImage(moving)
resample.SetInterpolator(sitk.sitkNearestNeighbor)
resample.SetTransform(tx)
img = resample.Execute(moving)

sitk.WriteImage(img, 'out.mhd')
```

I am using SimpleITK 2.0.

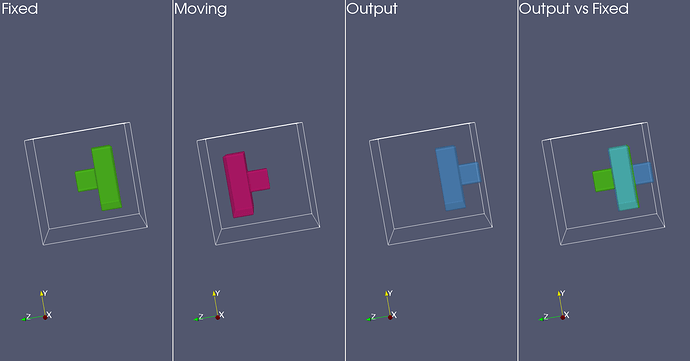

The shape in the moving image is rotated by 180°. However, the result found here simply shifts the moving image along the z-axis so that the larger boxes overlap.

I have to admit that this example exaggerates the issue a bit. However, I had two images that were rotated by approximately 40° to 180° relative to each other, and the result was that the moving image was scaled down and placed inside the other one.

I wonder whether this is a common issue and images should be aligned sufficiently well beforehand, or whether there is anything I can do here.

I played around with weighting the transform parameters using R.SetOptimizerWeights, but that did not give a good solution either. Using different metrics, sampling methods, or optimizers did not change the result.

What you are experiencing is a feature, not a bug: a robust registration depends on a robust initialization.
