Hi again,

I ran into more trouble on both Windows and Linux when compiling against ITK from the install directory (the one defined by INSTALL_PREFIX), so I ended up using the BIN folder instead, as suggested in this thread: Neural Networks Examples

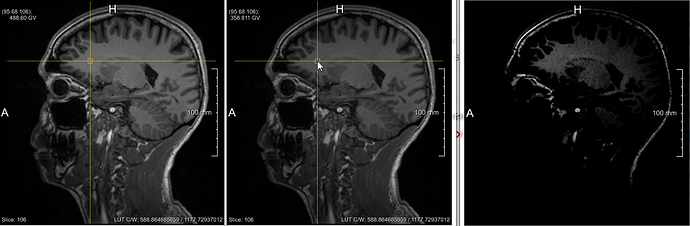

Apart from this, I can reproduce the same results on both machines, Windows and Linux, using v5rc01. This is good news, but I was a bit surprised by the differences between v5rc01 and v4.11.0: the bias seems to be corrected differently. The following image shows the v4 result on the left, the v5 result in the center, and the difference between them on the right (after adjusting the contrast). The differences range from -0.00715885 to 332.423, and the cursor is on a voxel where the difference is greater than 100.
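
For reference, the comparison described above can be sketched in Python with NumPy. This is a minimal sketch, not the exact procedure I used: it assumes both corrected volumes have already been loaded as arrays on the same voxel grid (for example with `itk.GetArrayFromImage`). The function name `compare_corrections`, the v4-minus-v5 sign convention and the threshold of 100 are illustrative assumptions.

```python
import numpy as np


def compare_corrections(v4, v5, threshold=100.0):
    """Voxel-wise comparison of two N4-corrected volumes (hypothetical helper).

    Assumes v4 and v5 are arrays on the same voxel grid. The sign
    convention (v4 minus v5) is an assumption.
    Returns (min difference, max difference, voxels with |diff| > threshold).
    """
    v4 = np.asarray(v4, dtype=np.float64)
    v5 = np.asarray(v5, dtype=np.float64)
    if v4.shape != v5.shape:
        raise ValueError("volumes must share the same shape")

    # Voxel-wise difference image
    diff = v4 - v5

    # Count voxels whose absolute difference exceeds the threshold
    n_large = int(np.count_nonzero(np.abs(diff) > threshold))

    return float(diff.min()), float(diff.max()), n_large
```

Run on the two N4 outputs, it returns the range of the difference image plus the number of voxels above the threshold, which helps tell whether the large differences are isolated or widespread.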

I checked the latest changes to the N4BiasFieldCorrection filter and mostly saw performance improvements, so what could be the reason for such a change in the results? Overall, our impression is that the results are better, but we cannot confirm this because we don't have a validation dataset for N4 correction.

Thanks,

Ricardo
