Hello, I want to retrieve the image data from an ITK image and load it into MATLAB.

Transferring the data pixel by pixel is too slow, and there is no direct function like `mxArray::new_from_buffer` that can do it. So my idea is to pass the whole buffer to MATLAB as a 1×N array and then reshape the matrix to get the correct image.

Unfortunately, the image is not what I expected.

The original image is 20202 * 12133 * 3.

Getting the image data in ITK (C++):

```cpp
// Size of the resampled output image: size[0] is x (width), size[1] is y (height)
itk::Image<PixelType, Dimension>::SizeType size =
    resampleF->GetOutput()->GetLargestPossibleRegion().GetSize();

// Raw pointer to the contiguous pixel buffer
void * dataPtr = resampleF->GetOutput()->GetPixelContainer()->GetBufferPointer();

// Three 8-bit components per pixel
long long unsigned int buffer_len = 3 * size[0] * size[1];

mwArray file_name(outFilename.c_str());
mwArray mwWidth(1, 1, mxUINT16_CLASS);
mwArray mwHeight(1, 1, mxUINT16_CLASS);

// Copy the whole buffer into a 1 x N uint8 MATLAB array
mwSize mdim = buffer_len;
mwArray mdisp_image(1, mdim, mxUINT8_CLASS, mxREAL);
mdisp_image.SetData((mxUint8*)dataPtr, mdim);

mwWidth.SetData(&size[0], 1);
mwHeight.SetData(&size[1], 1);

// Call the MATLAB function compiled into the DLL
matlabProcessFuncFromDLL(mdisp_image, mwWidth, mwHeight, file_name);
```
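A likely mismatch to check is channel ordering. For an RGB pixel type such as `itk::RGBPixel<unsigned char>`, ITK stores each pixel's components together (R, G, B of pixel 0, then R, G, B of pixel 1, ...) with x varying fastest, while MATLAB expects a height × width × 3 array in column-major order (all red samples first, row index varying fastest). A minimal sketch, assuming the buffer holds `width * height` pixels of three `unsigned char` components, is to reorder the samples on the C++ side before calling `SetData`. `toMatlabOrder` is a hypothetical helper, not an ITK or MATLAB function.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of ITK or the MATLAB API).
// Assumes `src` holds width*height RGB pixels, interleaved as
// R,G,B,R,G,B,... with x varying fastest (ITK's buffer order).
// Returns the same samples in MATLAB column-major order for a
// height x width x 3 array: row (y) fastest, then column (x), then channel.
std::vector<std::uint8_t> toMatlabOrder(const std::uint8_t* src,
                                        std::size_t width,
                                        std::size_t height)
{
    assert(src != nullptr || width * height == 0);
    std::vector<std::uint8_t> dst(3 * width * height);
    for (std::size_t c = 0; c < 3; ++c)
        for (std::size_t x = 0; x < width; ++x)
            for (std::size_t y = 0; y < height; ++y)
                // MATLAB element (row y, column x, page c), 0-based
                dst[y + height * (x + width * c)] = src[c + 3 * (x + width * y)];
    return dst;
}
```

With this ordering the MATLAB side would only need `reshape(imgdata, [height, width, 3])`, for example after `std::vector<std::uint8_t> planar = toMatlabOrder(static_cast<std::uint8_t*>(dataPtr), size[0], size[1]);` and `mdisp_image.SetData(planar.data(), mdim);`. The cost is a second full-size copy (about 735 MB for a 20202 × 12133 × 3 image); the same reordering could instead be done in MATLAB with `permute(reshape(imgdata, [3, width, height]), [3 2 1])`.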

Processing the image data in MATLAB (`matlabProcessFuncFromDLL`):

```matlab
imgdata = reshape(imgdata, [width, height, 3]);
```

The result looks like this:

```matlab
imgdata = reshape(imgdata, [height, width, 3]);
```

Result:

Reshaping and then transposing it:

```matlab
imgdata = reshape(imgdata, [width, height, 3]);
% Swap the x and y axes (transpose each channel)
T = affine2d([0 1 0; 1 0 0; 0 0 1]);
new_imgdata = imwarp(imgdata, T);
```

Result:

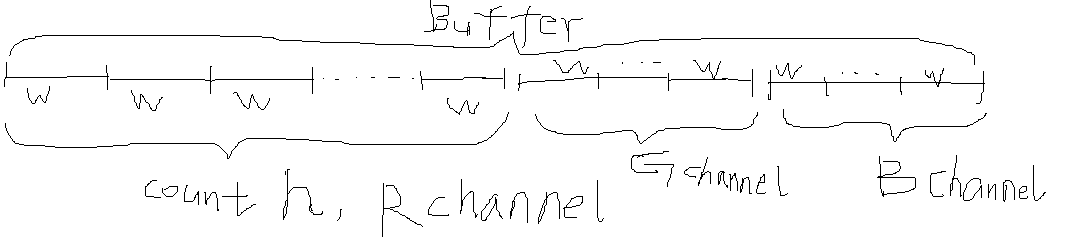

Is the data returned by `GetBufferPointer()` structured like this? The total size is w * h * 3.
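Assuming interleaved RGB with x varying fastest, component c of pixel (x, y) sits at offset `3 * (x + width * y) + c`, while MATLAB's `reshape` fills its output in column-major order. The sketch below uses two hypothetical, 0-based helpers to compute which buffer element a given MATLAB subscript reads; it illustrates why `reshape(imgdata, [width, height, 3])` mixes channels, whereas `reshape(imgdata, [3, width, height])` lines up with the buffer.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Column-major (MATLAB-style) linear index, 0-based, for a 3-D array
// with dimensions dims = {d0, d1, d2} and subscripts s = {s0, s1, s2}.
std::size_t colMajorIndex(const std::array<std::size_t, 3>& dims,
                          const std::array<std::size_t, 3>& s)
{
    return s[0] + dims[0] * (s[1] + dims[1] * s[2]);
}

// Decode a 0-based offset into an interleaved RGB buffer
// (3 components per pixel, x fastest) back into {x, y, c}.
std::array<std::size_t, 3> interleavedSubs(std::size_t offset, std::size_t width)
{
    assert(width > 0);
    const std::size_t pixel = offset / 3;
    return {{pixel % width, pixel / width, offset % 3}};
}
```

For example, with width 4 and height 2, `colMajorIndex({4, 2, 3}, {0, 0, 1})` is 8, and `interleavedSubs(8, 4)` gives `{2, 0, 2}`: what the `[width, height, 3]` reshape treats as the green value of pixel (0, 0) is really the blue value of pixel (2, 0). A transpose cannot undo that. With `[3, width, height]`, MATLAB subscript `{c, x, y}` maps back to exactly `{x, y, c}`, so only a `permute(..., [3 2 1])` is needed afterwards to get height × width × 3.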

Is passing a 1×N array to MATLAB and reshaping it a feasible way to get a MATLAB image, or am I misunderstanding something? @matt.mccormick, do you have any idea about this? I noticed you have a repository related to it.