Authors: Matt McCormick, Mary Elise Dedicke, Alex Remedios

Reproducibility in Computational Experiments

Jupyter has emerged as a fundamental component in artificial intelligence (AI) solution development and scientific inquiry. Jupyter notebooks are prevalent in modern education, commercial applications, and academic research. The Insight Toolkit (ITK) is an open source, cross-platform toolkit for N-dimensional image processing, segmentation, and registration, used to obtain quantitative insights from medical, biomicroscopy, material science, and geoscience images. The ITK community highly values scientific reproducibility and software sustainability. As a result, advanced computational methods in the toolkit have a dramatically larger impact, because they can be reproducibly applied in derived research or commercial applications.

Since ITK’s inception in 1999, there has been a focus on engineering practices that result in high-quality software. High-quality scientific software is driven by regression testing. The ITK project supported the development of the CTest testing tool and the CDash software quality dashboard for use with the CMake build system. In the Python programming language, the pytest test driver helps developers write small, readable tests that ensure their software continues to work as expected. However, pytest only collects Python test files by default, and errors are more common in untested computational notebooks than in well-tested Python code.
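For readers new to pytest, a test is simply a function whose name starts with `test_`, placed in a file named like `test_*.py`; pytest discovers and runs these automatically. The module below is a hypothetical illustration (the `normalize` function and file name are ours, not part of ITK):

```python
# test_normalize.py: a hypothetical pytest module (not part of ITK)
import math


def normalize(values):
    """Scale a sequence of non-negative numbers so that it sums to 1."""
    total = sum(values)
    return [v / total for v in values]


def test_normalize_sums_to_one():
    # Floating-point sums are compared with a tolerance, not with ==.
    result = normalize([1, 3, 4])
    assert math.isclose(sum(result), 1.0)


def test_normalize_preserves_ratios():
    assert normalize([1, 3]) == [0.25, 0.75]
```

Running `pytest test_normalize.py` executes both functions; when an assertion fails, pytest reports the values that were compared.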

In this post, we describe how ITK’s Python interface leverages nbmake, a simple, powerful tool that increases the quality of Jupyter notebooks through testing with the pytest test driver.

nbmake

nbmake is a pytest plugin that enables developers to verify that Jupyter notebook files run without error. This lets teams keep notebook-based documents and research up to date in an evolving project.

Quite a few libraries exist that relate to unit testing and notebooks:

- nbval is a strong fit for developers who want to check that cells always render to the same value

- testbook is maintained by the nteract community and is good for testing functions written inside notebooks

- nbmake is popular for those maintaining documentation and research material; it programmatically runs notebooks from top to bottom to validate the contents

We used nbmake because of the simplicity of its adoption, integration with pytest, and the ability to test locally and in continuous integration (CI) testing systems like GitHub Actions.
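To make "runs notebooks from top to bottom" concrete, the sketch below executes the code cells of a notebook in order and stops at the first failure. It is a deliberate simplification, not nbmake's implementation: nbmake runs notebooks in a real Jupyter kernel, whereas this sketch calls Python's `exec()` in one shared namespace, so IPython magics and shell escapes would fail here. The function names are hypothetical.

```python
import json
import traceback


def load_notebook(path):
    """Read an .ipynb file, which is plain JSON, into a dict."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def run_notebook_top_to_bottom(nb):
    """Execute every code cell of a notebook dict in order (simplified sketch).

    All cells share one namespace, so later cells see variables defined by
    earlier ones, as they would in a kernel. Returns None on success, or
    (cell_index, traceback_text) for the first cell that raises.
    """
    namespace = {"__name__": "__main__"}
    for index, cell in enumerate(nb["cells"]):
        if cell["cell_type"] != "code":
            continue  # markdown and raw cells are not executed
        source = cell["source"]
        if isinstance(source, list):  # nbformat allows a list of lines or one string
            source = "".join(source)
        try:
            exec(compile(source, f"<cell {index}>", "exec"), namespace)
        except Exception:
            return index, traceback.format_exc()
    return None
```

For example, `run_notebook_top_to_bottom(load_notebook("my_notebook.ipynb"))` should return `None` when every cell succeeds, and otherwise points at the failing cell with its traceback, which is roughly the information a notebook test needs to report.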

Getting Started

A great first step for testing notebooks is to run nbmake with its default settings:

```shell
pip install nbmake  # install the Python library
pytest --nbmake my_notebook.ipynb  # invoke pytest with nbmake on a notebook
```

This single command catches many common issues in the notebook, such as import errors or exceptions raised by a cell.

To check the notebook's content in more detail, add assertions to its cells, as in this two-cell example:

```python
# my_notebook.ipynb

# %% Cell 1
x = 42

# %% Cell 2
assert x == 42
```

Use assertions to check your notebook is working as expected.
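To try the two-cell example without opening Jupyter, the sketch below writes it to disk as raw notebook JSON using only the standard library. It assumes the nbformat 4 layout with minor version 4 (so cells carry no `id` field); the helper names are ours. Afterwards, `pytest --nbmake my_notebook.ipynb` should execute both cells.

```python
import json


def make_code_cell(source):
    """Return a minimal nbformat 4 code cell that has not been executed yet."""
    return {
        "cell_type": "code",
        "execution_count": None,
        "metadata": {},
        "outputs": [],
        "source": source,
    }


def write_example_notebook(path):
    """Write the two-cell example notebook to `path` and return it as a dict."""
    notebook = {
        "cells": [
            make_code_cell("x = 42"),          # Cell 1
            make_code_cell("assert x == 42"),  # Cell 2: fails the test if x changes
        ],
        "metadata": {
            "kernelspec": {"display_name": "Python 3", "language": "python", "name": "python3"},
            "language_info": {"name": "python"},
        },
        "nbformat": 4,
        "nbformat_minor": 4,
    }
    with open(path, "w", encoding="utf-8") as f:
        json.dump(notebook, f, indent=1)
    return notebook


if __name__ == "__main__":
    write_example_notebook("my_notebook.ipynb")
```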

Note: If you want to present a cleaner version of the notebook without assertions, you can use Jupyter Book to render it as a website and add the `remove-cell` tag to the assertion cells to omit them from the output.
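Tagging cells by hand gets tedious in large notebooks. The sketch below adds the `remove-cell` tag to every code cell containing an unindented `assert` line; Jupyter Book reads tags from each cell's `metadata.tags` list. Detecting assertions by line prefix is a heuristic chosen for this example, and the function names are hypothetical. Because the tag only affects rendering, nbmake should still execute these cells.

```python
import json


def tag_assertion_cells(nb, tag="remove-cell"):
    """Add `tag` to each code cell that has an unindented `assert` line.

    Returns the number of cells newly tagged; running it twice is harmless.
    """
    tagged = 0
    for cell in nb["cells"]:
        if cell["cell_type"] != "code":
            continue
        source = cell["source"]
        text = "".join(source) if isinstance(source, list) else source
        if not any(line.startswith("assert ") for line in text.splitlines()):
            continue
        tags = cell.setdefault("metadata", {}).setdefault("tags", [])
        if tag not in tags:
            tags.append(tag)
            tagged += 1
    return tagged


def tag_notebook_file(path):
    """Tag assertion cells in an .ipynb file in place."""
    with open(path, encoding="utf-8") as f:
        nb = json.load(f)
    count = tag_assertion_cells(nb)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(nb, f, indent=1)
    return count
```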

Tips for using nbmake

Adding tests to a software package is always hardest at the start; once testing is in place, maintaining quality software becomes much easier.

If your directory contains an assortment of notebooks, you will find different blockers:

- some require kernels with different types and names

- some raise errors, expectedly or unexpectedly

- some require authentication and network dependencies

- some will take hours to run
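Before running anything, it can help to survey the directory. The sketch below lists each notebook with its kernel name (read from `metadata.kernelspec.name`) and its number of code cells, smallest first, which surfaces kernel mismatches and suggests a short notebook to start with. Code-cell count is only a rough proxy for run time, and the function name is hypothetical.

```python
import json
from pathlib import Path


def survey_notebooks(root):
    """Return (code_cell_count, kernel_name, path) for each notebook under `root`.

    Copies inside .ipynb_checkpoints folders are skipped. Rows are sorted so the
    smallest notebooks, good candidates for a first nbmake run, come first.
    """
    rows = []
    for path in sorted(Path(root).rglob("*.ipynb")):
        if ".ipynb_checkpoints" in path.parts:
            continue
        with path.open(encoding="utf-8") as f:
            nb = json.load(f)
        kernel = nb.get("metadata", {}).get("kernelspec", {}).get("name", "<unknown>")
        code_cells = sum(1 for cell in nb.get("cells", []) if cell.get("cell_type") == "code")
        rows.append((code_cells, kernel, str(path)))
    rows.sort()
    return rows


if __name__ == "__main__":
    for code_cells, kernel, path in survey_notebooks("."):
        print(f"{code_cells:4d} code cells  kernel={kernel:<12} {path}")
```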

Our advice is to start small.

Try running nbmake locally from your development environment on a single short notebook. If that is a challenge, use the `--nbmake-find-import-errors` flag to check only for missing dependencies.
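The idea behind a missing-dependency check can be illustrated statically. The sketch below parses each code cell with `ast` (nothing is executed) and asks `importlib` whether each imported top-level package can be found. This is our own illustration, not how nbmake's flag is implemented; cells that do not parse as Python (for example, ones containing IPython magics such as `%matplotlib`) are skipped entirely, so their imports go unchecked.

```python
import ast
import importlib.util


def find_missing_imports(nb):
    """Return sorted top-level module names imported by code cells but not installed."""
    missing = set()
    for cell in nb["cells"]:
        if cell["cell_type"] != "code":
            continue
        source = cell["source"]
        text = "".join(source) if isinstance(source, list) else source
        try:
            tree = ast.parse(text)
        except SyntaxError:
            continue  # magics or shell escapes such as !pip are not valid Python
        for node in ast.walk(tree):
            if isinstance(node, ast.Import):
                names = [alias.name for alias in node.names]
            elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
                names = [node.module]  # relative imports (level > 0) are ignored
            else:
                continue
            for name in names:
                top = name.split(".")[0]
                if importlib.util.find_spec(top) is None:
                    missing.add(top)
    return sorted(missing)
```

Collecting every missing name in one pass, rather than failing on the first `ImportError`, lets you fix a virtual environment in a single round.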

Once you have a minimal quality bar, you can think about what’s next for your testing approach:

- Run software quality checks on CI

- Test more notebooks

- Reduce the test time by running notebooks in parallel with pytest-xdist

- Use the `--overwrite` flag to write the executed notebooks to disk, to build documentation or commit them to version control
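The last two items can be combined in one invocation. The sketch below builds a pytest command line for every notebook under a folder: `-n auto` is pytest-xdist's option to pick the number of worker processes from the available CPUs, and `--overwrite` is the nbmake flag mentioned above. Both plugins must be installed for the command to run; the function name and the `docs` folder are hypothetical.

```python
import subprocess
import sys
from pathlib import Path


def build_nbmake_command(root, workers="auto", overwrite=False):
    """Build a pytest command that runs nbmake on each notebook under `root`.

    Pass workers=None to run serially (no pytest-xdist needed).
    """
    notebooks = sorted(
        str(p) for p in Path(root).rglob("*.ipynb") if ".ipynb_checkpoints" not in p.parts
    )
    command = [sys.executable, "-m", "pytest", "--nbmake"]
    if workers is not None:
        command += ["-n", str(workers)]  # pytest-xdist: number of parallel workers
    if overwrite:
        command.append("--overwrite")  # nbmake: save executed notebooks to disk
    return command + notebooks


if __name__ == "__main__":
    cmd = build_nbmake_command("docs", overwrite=True)  # "docs" is a hypothetical folder
    sys.exit(subprocess.run(cmd).returncode)
```

Listing notebooks explicitly, rather than passing the folder to pytest, makes it easy to filter out the slow or network-dependent notebooks identified earlier.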

For complete docs and troubleshooting guides, see the nbmake GitHub repo.

ITK Use Cases

When creating spatial analysis processing pipelines, example code, notebook visualization tools, and documentation, ITK uses nbmake to keep its notebooks high quality and easy to maintain, free of import errors and other unexpected errors.

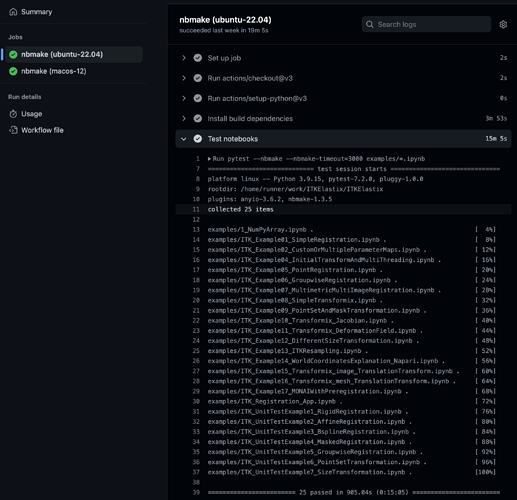

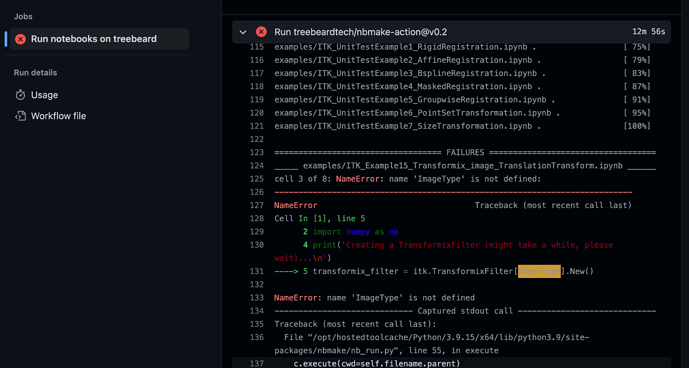

In ITK extensions such as ITKIOScanco (a module to work with 3D microtomography volumes) or ITKElastix (a toolbox for rigid and nonrigid registration of images), nbmake runs notebooks in GitHub Actions CI tests.

ITKElastix GitHub Actions notebook test output, displaying standard pytest status information.

CI testing catching an error and displaying traceback information via pytest.

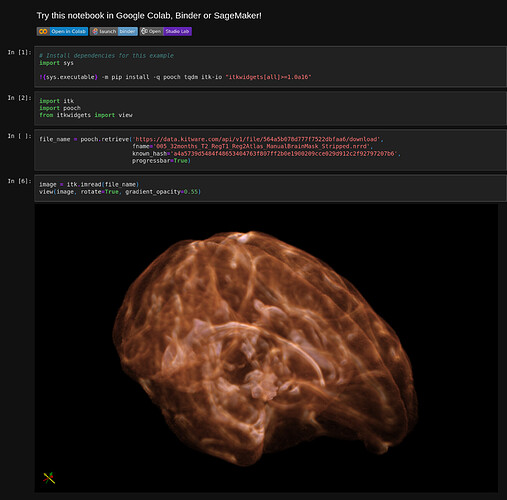

itkwidgets, a next-generation, simple 3D visualization tool for Python notebooks, uses nbmake to check its functionality.

An itkwidgets visualization notebook tested with nbmake.

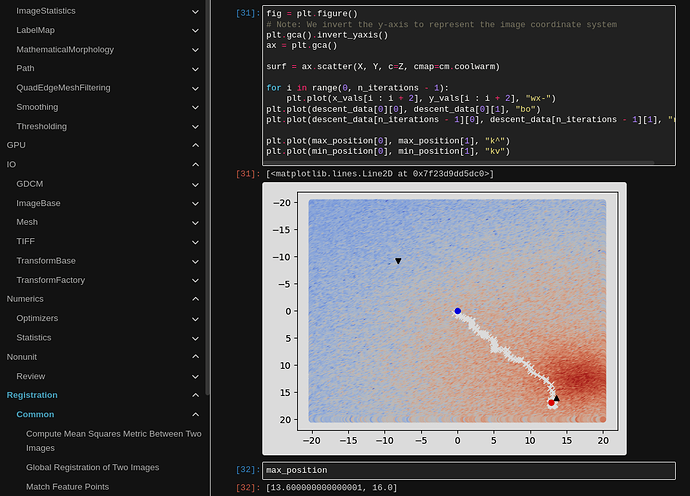

In ITK’s Sphinx examples, notebooks are embedded into HTML documentation and continuously tested.

An explanation of optimization during image registration. The notebook is rendered in Sphinx and tested with nbmake.

Next Steps

While notebooks have been around for some time, we are still learning how to integrate them into rigorous engineering processes. The nbmake project is continuing to develop ways to remove friction associated with maintaining quality notebooks in many organizations.

Over the next year, there will be updates to both the missing imports checker (to keep virtual environments up to date) and the mocking system (to skip slow and complex cells during testing).

nbmake is maintained by treebeard.io, a machine learning infrastructure company based in the UK. ITK is a NumFOCUS project with commercial support offered by Kitware, an open source scientific computing company headquartered in the USA.