Hello @zivy, sorry for the naive question. Does “apply it to the original three channel image” mean applying the correction steps to all three channels, like below?

```python
import SimpleITK as sitk

def gamma_correction(I):
    # nonlinear gamma correction (sRGB transfer function)
    I = (
        I * sitk.Cast(I <= 0.0031308, sitk.sitkFloat32) * 12.92
        + I ** (1 / 2.4) * sitk.Cast(I > 0.0031308, sitk.sitkFloat32) * 1.055
        - 0.055
    )
    return sitk.Cast(sitk.RescaleIntensity(I), sitk.sitkUInt8)

# split the color image into float32 channels and correct each one separately
channels = [
    sitk.VectorIndexSelectionCast(color_input_image, i, sitk.sitkFloat32)
    for i in range(color_input_image.GetNumberOfComponentsPerPixel())
]

res = [gamma_correction(c) for c in channels]
```

Then I compose the three channels back into an RGB image:

```python
corrected_image_full_resolution = sitk.Compose(res)
corrected_image_full_resolution = sitk.Cast(corrected_image_full_resolution, sitk.sitkVectorUInt8)
```

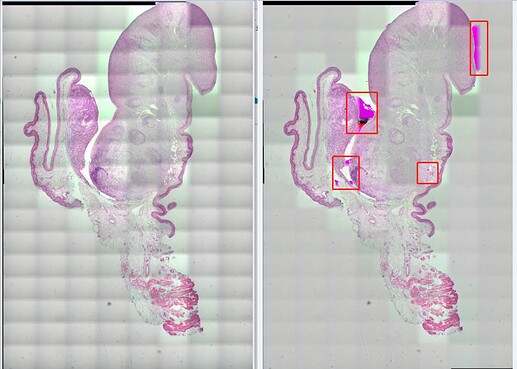

This did not even out the illumination.
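For reference, a small sketch of why a pointwise gamma curve on its own is not expected to flatten uneven illumination, assuming the usual multiplicative model `observed = reflectance * bias` (an assumption, not something stated above). The same curve is applied to every pixel regardless of its position, so a region that is darker before the mapping stays darker after it; only dividing by the spatially varying bias (the field N4 estimates) removes the gradient. The pixel values are made up and already normalized to [0, 1], which is the range the sRGB formula expects.

```python
# Hypothetical 1-D "row" of pixels: constant reflectance, illumination that
# falls off from left to right (multiplicative bias model, assumed).
reflectance = 0.5
illumination = [1.0, 0.8, 0.6, 0.4, 0.2]
row = [reflectance * b for b in illumination]

def srgb_encode(c):
    # sRGB transfer function (same constants as gamma_correction), input in [0, 1]
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055

def remove_bias(values, bias):
    # divide each pixel by the bias value at its own position
    return [v / b for v, b in zip(values, bias)]

gamma_row = [srgb_encode(v) for v in row]  # pointwise curve only
flat_row = remove_bias(row, illumination)  # divide by the (known) bias

print([round(v, 3) for v in gamma_row])  # still decreases left to right
print([round(v, 3) for v in flat_row])   # constant: 0.5 everywhere
```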

Or does it just mean replacing `sitk.ReadImage(image_path, sitk.sitkFloat32)` with the `srgb2gray` result, like this:

```python
def srgb2gray(image):
    # Convert an sRGB image to gray scale and rescale the result to [0, 255]
    channels = [
        sitk.VectorIndexSelectionCast(image, i, sitk.sitkFloat32)
        for i in range(image.GetNumberOfComponentsPerPixel())
    ]
    # linear mapping
    I = 1 / 255.0 * (0.2126 * channels[0] + 0.7152 * channels[1] + 0.0722 * channels[2])
    # nonlinear gamma correction
    I = (
        I * sitk.Cast(I <= 0.0031308, sitk.sitkFloat32) * 12.92
        + I ** (1 / 2.4) * sitk.Cast(I > 0.0031308, sitk.sitkFloat32) * 1.055
        - 0.055
    )
    # I changed sitkUInt8 to sitkFloat32 because N4BiasFieldCorrectionImageFilter
    # does not accept sitkUInt8 input
    return sitk.Cast(sitk.RescaleIntensity(I), sitk.sitkFloat32)
```

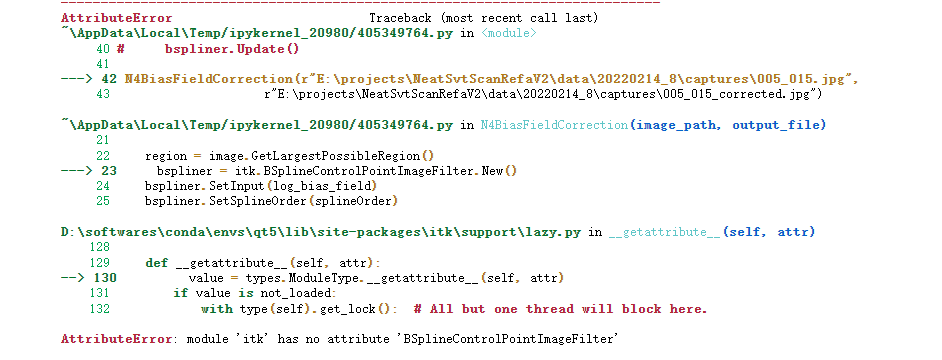
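A side note on the `srgb2gray` steps, as I understand the sRGB definition: the 0.2126/0.7152/0.0722 luminance weights apply to linear RGB, and the piecewise curve used in `srgb2gray` is the encoding direction (linear to sRGB). The plain-Python sketch below works on a single pixel and shows the decode step that would linearize 8-bit sRGB values first, so the encoding curve ends up applied only once overall. The helper names (`srgb_decode`, `srgb_encode`, `srgb_pixel_to_gray`) are hypothetical and not part of SimpleITK; the same piecewise expressions could be written with SimpleITK image masks, the way `srgb2gray` already does.

```python
def srgb_decode(c):
    # sRGB (gamma-encoded) -> linear, c in [0, 1]
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

def srgb_encode(c):
    # linear -> sRGB (gamma-encoded), c in [0, 1]; same curve as in srgb2gray
    return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055

def srgb_pixel_to_gray(r, g, b):
    # hypothetical helper: 8-bit sRGB pixel -> 8-bit gray value
    lin = [srgb_decode(v / 255.0) for v in (r, g, b)]
    # luminance is a weighted sum of *linear* RGB
    y = 0.2126 * lin[0] + 0.7152 * lin[1] + 0.0722 * lin[2]
    # re-encode once and scale back to [0, 255]
    return round(255 * srgb_encode(y))

print(srgb_pixel_to_gray(128, 128, 128))  # a neutral gray keeps its value: 128
```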

```python
def N4BiasFieldCorrection(image_path, output_file):
    color_input_image = sitk.ReadImage(image_path)
    inputImage = srgb2gray(color_input_image)
    image = inputImage
    maskImage = sitk.OtsuThreshold(inputImage, 0, 1, 200)
```

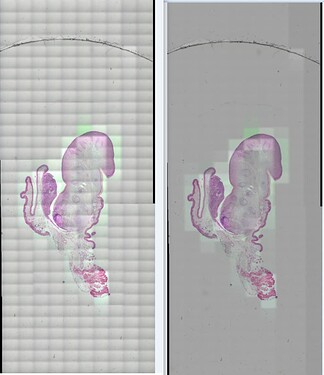

```python
    # shrink image and mask by a factor of 16 per dimension to speed up estimation
    image = sitk.Shrink(inputImage, [16] * inputImage.GetDimension())
    maskImage = sitk.Shrink(maskImage, [16] * inputImage.GetDimension())

    corrector = sitk.N4BiasFieldCorrectionImageFilter()
    corrector.SetSplineOrder(3)
    corrector.SetWienerFilterNoise(0.01)
    corrector.SetBiasFieldFullWidthAtHalfMaximum(0.15)
    corrector.SetConvergenceThreshold(0.00001)
    corrector.SetMaximumNumberOfIterations([1, 1, 1, 1])
    corrected_image = corrector.Execute(image, maskImage)

    # evaluate the estimated log bias field on the full-resolution grid
    log_bias_field = corrector.GetLogBiasFieldAsImage(inputImage)
    bias_field = sitk.Exp(log_bias_field)
```

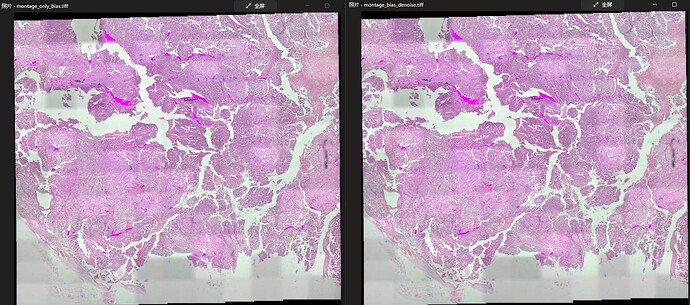

```python
    # divide each color channel by the same full-resolution bias field
    corrected_image_full_resolution = sitk.Compose(
        [
            sitk.VectorIndexSelectionCast(color_input_image, i, sitk.sitkFloat32) / bias_field
            for i in range(3)
        ]
    )
    corrected_image_full_resolution = sitk.Cast(corrected_image_full_resolution, sitk.sitkVectorUInt8)
    sitk.WriteImage(corrected_image_full_resolution, output_file)
```

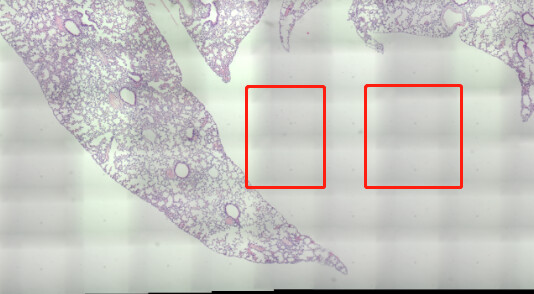

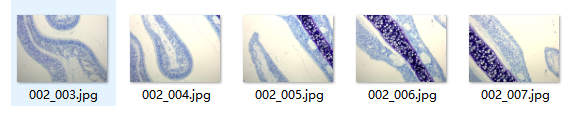

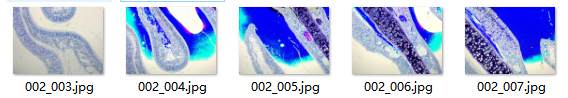

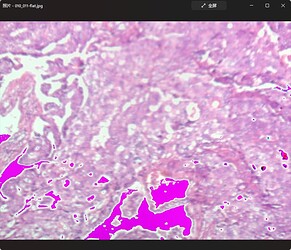

The colors changed in this result too, and I think it applies the gamma correction twice.
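One possible contributor to a color shift, offered as an assumption rather than a diagnosis: dividing by a bias field with values below 1 pushes channel values up, possibly above 255, and as far as I know `sitk.Cast` to `sitkVectorUInt8` does not clamp out-of-range values (clamping is a separate operation). The NumPy sketch below stands in for arrays obtained with `sitk.GetArrayFromImage`; the pixel and bias values are made up. It clips to [0, 255] before converting to 8-bit so out-of-range values saturate instead of being converted arbitrarily.

```python
import numpy as np

# Hypothetical 2x2 RGB image (H, W, 3) and bias field (H, W); the bottom row
# is darker, so the bias there is below 1.
rgb = np.array(
    [[[200, 150, 100], [220, 160, 90]],
     [[100, 75, 50], [140, 80, 45]]],
    dtype=np.float32,
)
bias = np.array([[1.0, 1.0], [0.5, 0.5]], dtype=np.float32)

# divide every channel by the same bias value at each pixel
corrected = rgb / bias[..., np.newaxis]

# round and clip to the uint8 range before casting, so values saturate at 255
corrected_u8 = np.clip(np.rint(corrected), 0, 255).astype(np.uint8)

print(corrected.max())     # above 255
print(corrected_u8[1, 1])  # red channel saturates at 255
```

Clipping does alter the channel ratios of the saturated pixels. Dividing all three channels by one common factor instead (for example, so the global maximum fits in 255) would keep the ratios intact, at the cost of darkening the whole image.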

If neither of the two versions above is what you meant, how should I apply it to the original three-channel image? I know an RGB image can be converted to HSV, where the V channel represents intensity, but a grayscale image has only one channel. How can I extract its intensity information and apply it to the original three channels?
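Regarding the HSV idea, a minimal check using Python's standard `colorsys` module on one made-up pixel. Dividing all three channels by the same bias value at a pixel, which is what the `sitk.Compose(... / bias_field ...)` line in the second version does, scales that pixel's V by the same factor and, before any clipping or casting, leaves H and S unchanged. So a bias field estimated on the gray image can be applied to the color channels directly, without converting to HSV. The bias value of 0.5 is an arbitrary assumption chosen so the floating-point arithmetic is exact.

```python
import colorsys

# one hypothetical pixel (8-bit values scaled to [0, 1]) and the bias value
# estimated at that pixel
r, g, b = 120 / 255, 80 / 255, 40 / 255
bias = 0.5

h0, s0, v0 = colorsys.rgb_to_hsv(r, g, b)
# apply the same bias to every channel, as the Compose(... / bias_field) step does
h1, s1, v1 = colorsys.rgb_to_hsv(r / bias, g / bias, b / bias)

print(round(h0 - h1, 12), round(s0 - s1, 12))  # hue and saturation unchanged
print(round(v1 / v0, 12))                      # V scaled by 1 / bias = 2.0
```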